The challenge

In a typical waterfall software development project, testing takes place immediately before the release goes into production. When bugs or usability issues are found at this stage, the release is delayed until they are fixed.

In this model, testing becomes a bottleneck that seriously impedes on-time delivery and can damage the company's business. Another major problem with this practice is that a defect costs more to correct the later it is found.

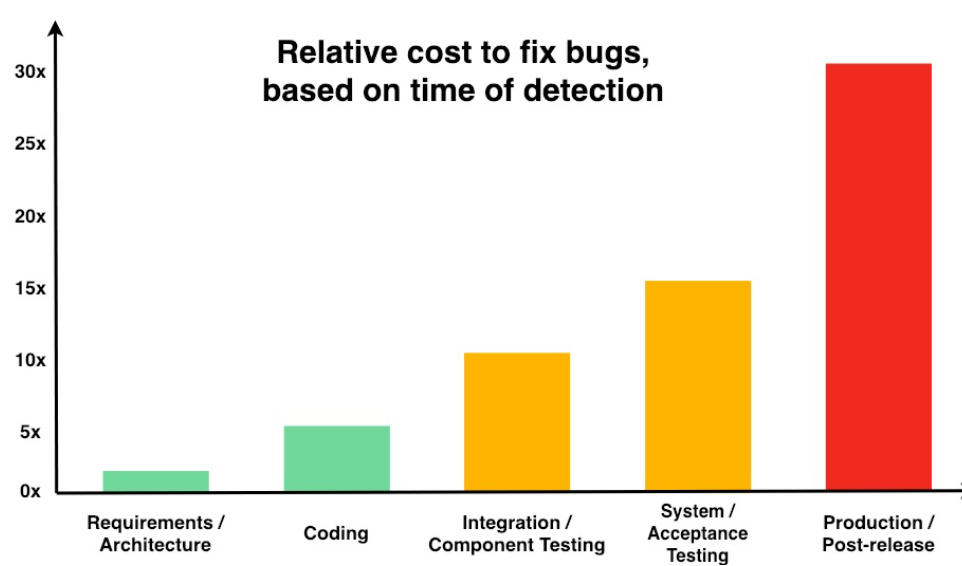

The following chart, taken from articles by the National Institute of Standards and Technology (NIST), helps to visualize how the effort to detect and correct defects increases as software moves through the five major phases of software development.

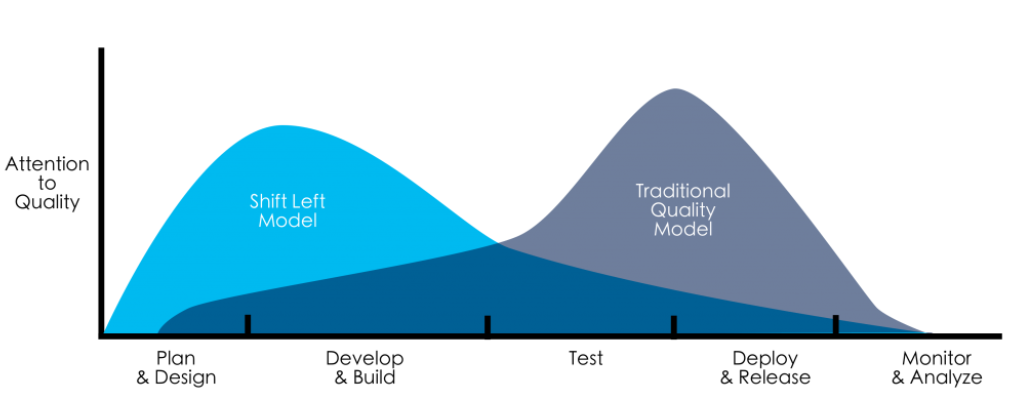

Shift Left Testing promises to improve this scenario by involving test teams early in the process. Problems, whether in design or code, can be addressed early, before they become serious. In fact, shifting left has less to do with problem detection and more to do with problem prevention.

But how to bring agility to mainframe platforms?

If all these problems are a challenge on the distributed platform, where DevOps practices and agile methodologies are much more common, imagine the scenario in development environments on the mainframe platform.

As it is a centralized platform, with high costs and a shortage of skilled labor, the scenario is much more complex.

Today, most projects involve both the distributed platform and the z/OS platform, so if one side is late, testing can be compromised. Because of the centralized structure of the mainframe, delays are common and end up affecting the company's business.

The current market is also very competitive, and delaying the delivery of products to the business areas can have a considerable financial impact.

Ensuring quality and anticipating basic problems

During coding, static analysis tools are one of many options for locating potential bugs, performance issues, and inefficiencies in the software. Like compilers, static analyzers take a program as input; they then generate code quality reports that point out possible problems and the use of features that are prohibited or considered harmful by the company.

With this type of tool in place, some problems can be caught as early as compile time, before the code proceeds to functional testing.
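To make the idea concrete, here is a minimal sketch of a rule-based static check, written in Python for readability. It is an illustration only: the rule IDs, patterns, and sample source are hypothetical, and it does not represent how any particular product works. It assumes fixed-format COBOL, where an asterisk in column 7 marks a comment line.

```python
import re
from dataclasses import dataclass

# Hypothetical house rules: rule ID -> (pattern, message).
# Illustrative only; not taken from any real product.
RULES = {
    "NO-ALTER": (re.compile(r"\bALTER\b", re.IGNORECASE),
                 "ALTER statement is prohibited"),
    "NO-GOTO": (re.compile(r"\bGO\s+TO\b", re.IGNORECASE),
                "GO TO is discouraged"),
    "NO-SELECT-STAR": (re.compile(r"\bSELECT\s+\*", re.IGNORECASE),
                       "SELECT * is discouraged in embedded SQL"),
}


@dataclass
class Finding:
    line_no: int
    rule_id: str
    message: str


def analyze(source: str) -> list[Finding]:
    """Scan source text line by line and report every rule violation."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        # Fixed-format COBOL: an asterisk in column 7 marks a comment line.
        if len(line) > 6 and line[6] == "*":
            continue
        for rule_id, (pattern, message) in RULES.items():
            if pattern.search(line):
                findings.append(Finding(line_no, rule_id, message))
    return findings


# Hypothetical sample program fragment.
SAMPLE = "\n".join([
    "       PROCEDURE DIVISION.",
    "      * GO TO inside a comment is ignored",
    "           GO TO PARA-EXIT.",
    "           EXEC SQL SELECT * FROM ACCOUNTS END-EXEC.",
])

if __name__ == "__main__":
    for f in analyze(SAMPLE):
        print(f"line {f.line_no}: [{f.rule_id}] {f.message}")
```

A real analyzer would parse the program rather than match text line by line, but the flow is the same: program in, quality report out, before any functional test runs.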

Several tools are available on the market for the distributed platform, but what about the mainframe? For the mainframe platform, Eccox has unique tools, such as Eccox QC for Cobol and Eccox QC for DB2, which evaluate code directly on the mainframe, speeding up results and helping to ensure the quality of program deliveries. This serves as a first filter and can decrease the number of problems encountered during the testing phase.

Component Testing

The first major challenge in unit testing is infrastructure. Programs that interact with other resources, whether across platforms or across systems, are often difficult to unit test. Virtualization tools address this by simulating the integration with external resources, and debugging tools make it possible to change data at runtime when no data exists to satisfy a given test case. These tools help, but they do not guarantee that the program will work under real production conditions; they only serve to catch the more basic errors early.
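As a rough illustration of the virtualization idea, the sketch below replaces an external resource with a stand-in during a unit test, using Python's standard `unittest.mock`. The function `approve_transfer` and the `balance_service` object are hypothetical names invented for this example; on the mainframe the same role would be played by a virtualization tool rather than by this library.

```python
from unittest.mock import Mock


def approve_transfer(amount, account_id, balance_service):
    """Approve a transfer only if the external service reports enough funds."""
    balance = balance_service.get_balance(account_id)
    return amount <= balance


# The real service would live on another platform or system.
# Here a stand-in simulates it and returns data chosen for the test case.
virtual_service = Mock()
virtual_service.get_balance.return_value = 100

assert approve_transfer(80, "ACC-1", virtual_service) is True
assert approve_transfer(120, "ACC-1", virtual_service) is False

# The stand-in also records how it was called.
virtual_service.get_balance.assert_called_with("ACC-1")
```

The test passes without the external system being available, which is exactly the limitation noted above: it shows that the program logic handles the simulated answer, not that the real integration works.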

To advance to integration tests, it is often necessary to wait for the integration testing environment to be released, in order to avoid conflicts between program versions and/or data changes. The alternative is to risk combining tests that may conflict and generate unexpected results.

Integration

This is where a bottleneck often occurs, especially on the mainframe platform. Online environments (CICS/IMS) are often limited to testing one program version at a time and share a single database, either DB2 or VSAM. A change to some data can completely change the result expected by another program, in another test, that accesses the same table or file. To avoid this situation, many companies end up creating an entire structure of copies, whether entire LPARs or simply libraries, databases, CICS regions, and IMS regions, in order to maintain a certain degree of parallelism. However, this entails a high cost, not only for software but also for maintaining and updating the environment, and the amount of parallelism it provides is still limited.

How to anticipate tests

Since finding and fixing defects sooner can drastically reduce both project costs and delivery time, it makes sense to start integrated testing as soon as possible. But how can parallelism avoid making things worse in an environment full of conflicts? Mixing tests from different projects or different users in the same environment can often be more harmful than setting a priority order and testing one at a time. Testing one at a time could improve test quality, but at the price of longer delivery deadlines.

We know that, if the program is still in the initial testing phase, placing it in an integrated environment could destroy data and impact the tests of other users.

What if, within the same infrastructure with CICS, IMS, and DB2, it were possible to isolate executions so that each person could test in isolation, both for program versions and for data, whether DB2 or VSAM?

Unlike most tools on the market, which work with virtualization, Eccox has a unique tool, Eccox APT, that allows tests to run in parallel, with an unlimited amount of parallelism, using the concept of a container. With it, program versions can be tested early, directly from the change packages, without impacting the environment, since the databases can be isolated (cloned). Even if a program still has a logic defect and destroys data, the isolation of the databases keeps the problem from affecting the execution of other tests. And with a simple button on the web interface, the data can be restored from the official environment. Automation reduces human error, increases trust, speeds up delivery, and leaves people more time for challenging and inspiring tasks instead of days of exhausting activities!
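The effect of isolating data per test can be sketched in a few lines of Python. This is a conceptual illustration only, not a description of how Eccox APT is implemented: the `IsolatedBase` class, the sample table, and both programs are hypothetical, and a plain in-memory copy stands in for a cloned DB2 table or VSAM file.

```python
import copy

# Hypothetical stand-in for the official shared test data.
OFFICIAL_DATA = {
    "ACCOUNTS": [{"id": 1, "balance": 100}, {"id": 2, "balance": 250}],
}


class IsolatedBase:
    """A private clone of the official data for one test run."""

    def __init__(self, official):
        self._official = official
        self.data = copy.deepcopy(official)

    def reset(self):
        """Recompose the clone from the official data."""
        self.data = copy.deepcopy(self._official)


def buggy_program(base):
    # Logic defect: wipes the table instead of updating one row.
    base.data["ACCOUNTS"].clear()


def well_behaved_program(base):
    # Sums all balances in the table it sees.
    return sum(row["balance"] for row in base.data["ACCOUNTS"])


# Two testers work in parallel, each on a private clone.
base_a = IsolatedBase(OFFICIAL_DATA)
base_b = IsolatedBase(OFFICIAL_DATA)

buggy_program(base_a)                    # destroys data, but only in clone A
total_b = well_behaved_program(base_b)   # clone B still sees both rows

base_a.reset()                           # clone A is restored from the official data
```

Because each run works on its own copy, the destructive program in clone A changes neither the official data nor the result computed in clone B, and a reset brings clone A back to the official state.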

Container

The container provides a way to run multiple isolated workloads on a single instance of the system, which in this case can be CICS, IMS, or DB2. Using this concept, a test can be isolated without cloning the complete system. The container can be mounted with only the desired resources; any resource that is not in the container is taken from the environment's own version.
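The lookup rule described here (use the container's version of a resource if it has one, otherwise fall back to the environment) can be sketched with Python's standard `collections.ChainMap`. The resource names and versions below are hypothetical, and the sketch models only the resolution rule, not the actual mechanism inside CICS, IMS, or DB2.

```python
from collections import ChainMap

# Hypothetical resources as they exist in the shared test environment.
ENVIRONMENT = {
    "PGM-BILLING": "v1",
    "PGM-REPORT": "v1",
    "TABLE-ACCOUNTS": "shared",
}


def mount_container(overrides):
    """Layer a container's own resources over the shared environment."""
    # Lookups check the container first, then fall back to the environment.
    return ChainMap(dict(overrides), ENVIRONMENT)


# A container mounted with only the resources under test.
container = mount_container({
    "PGM-BILLING": "v2-change-package",
    "TABLE-ACCOUNTS": "clone-42",
})

billing = container["PGM-BILLING"]     # taken from the container
report = container["PGM-REPORT"]       # not in the container: environment version
accounts = container["TABLE-ACCOUNTS"]  # isolated clone instead of the shared table
```

Only two resources were placed in the container, yet the test sees a complete system, and the shared environment itself is left unchanged for everyone else.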

AUTHOR: Milene Oliveira, IT Solutions Consultant.

Related solutions: Eccox QC, Eccox APT.

Sources: Computerworld; BMC Blog; NIST